2-Way Audio (No SDK)

Introduction

Eagle Eye Networks 2-Way Audio enables communication between a client and one or more far-end cameras with associated audio devices, such as speakers and microphones. 2-Way Audio uses WebRTC to deliver peer-to-peer communication.

This guide describes how to implement 2-Way Audio using the 2-Way Audio Signaling Service without an SDK. Using an SDK is strongly recommended, however; the available SDKs can be found in the SDK section. All examples are written in JavaScript and assume an HTML front end.

Prerequisites

You will need the following to use and verify a 2-Way Audio integration:

- You should be logged in and have an OAuth JWT access token

- The account should have a camera that is associated with a speaker

- The bridge version should be 3.8.0

- A framework or library that supports Web Sockets and provides a WebRTC library
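The last prerequisite can be checked at runtime. The following is a minimal sketch, assuming a browser-like environment. `checkTwoWayAudioSupport` is a hypothetical helper name, not part of any Eagle Eye Networks API; it only reports whether the standard `WebSocket`, `RTCPeerConnection`, and `navigator.mediaDevices.getUserMedia` APIs are present.

```javascript
// Hypothetical helper: reports which standard browser APIs needed for
// 2-Way Audio are missing from the given global object.
function checkTwoWayAudioSupport(env = globalThis) {
  const missing = [];
  if (typeof env.WebSocket !== 'function') {
    missing.push('WebSocket');
  }
  if (typeof env.RTCPeerConnection !== 'function') {
    missing.push('RTCPeerConnection');
  }
  const mediaDevices = env.navigator && env.navigator.mediaDevices;
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    missing.push('navigator.mediaDevices.getUserMedia');
  }
  return { supported: missing.length === 0, missing };
}
```

Calling `checkTwoWayAudioSupport()` before starting a call lets the page show a clear message instead of failing partway through the steps below.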

1. Get Feeds Object

Fetch the /feeds endpoint for the camera or other associated device that you wish to use 2-Way Audio with. The feed object has important information for how to locate and identify the appropriate cloud resources.
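As a rough sketch, selecting the right feed might look like the following. It assumes the /feeds response has already been fetched and parsed into an array of feed objects (the exact response envelope is not shown in this guide), and `findTalkdownFeed` is a hypothetical helper name. The field names are taken from the example feed in this step.

```javascript
// Hypothetical helper: picks the 2-Way Audio ("talkdown") feed for a device
// from an already-parsed array of feed objects.
function findTalkdownFeed(feeds, deviceId) {
  const feed = feeds.find(
    (candidate) => candidate.deviceId === deviceId && candidate.type === 'talkdown'
  );
  if (!feed) {
    throw new Error(`No talkdown feed found for device ${deviceId}`);
  }
  // webRtcUrl is needed later to reach the Signaling Service.
  if (typeof feed.webRtcUrl !== 'string' || feed.webRtcUrl.length === 0) {
    throw new Error('Talkdown feed is missing webRtcUrl');
  }
  return feed;
}
```

The object returned has the same shape as the example feed that follows.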

let feed = {
  "deviceId": "100abcde",
  "webRtcUrl": "wss://edge.c000.eagleeyenetworks.com",
  "type": "talkdown",
  "id": "100abcde-talkdown",
  "mediaType": "halfDuplex"
};

2. Open Web Socket Connection

Open a Web Socket connection to the address given by webRtcUrl in the feeds object. This Web Socket service is known as the Signaling Service. As soon as the connection is open, send your JWT access token using the following format:

{
  destination: {
    type: "service",
    id: "edge",
  },
  payload: {
    eventName: "authorize",
    eventData: {
      token: "<accessToken>",
    },
  },
}

If the connection is successful, an authorized confirmation will be sent to the client:

{
  "source": {
    "type": "service",
    "id": "edge"
  },
  "payload": {
    "eventName": "authorized"
  }
}

If your token is invalid, or an authorize message is not sent to the Signaling Service promptly (within 5 seconds), the following message is sent to the client and the Web Socket connection is closed.

{
  "source": {
    "type": "service",
    "id": "edge"
  },
  "payload": {
    "eventName": "error",
    "eventData": {
      "status": "unauthorized",
      "context": "authorization",
      "message": "Token not valid",
      "detail": {
        "reason": "tokenIsNotValid"
      }
    }
  }
}

See below for more information about error handling.
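The authorization exchange in this step can be sketched as two small functions. This is an illustration under assumptions, not the service's reference client: it assumes messages travel as JSON text frames, and `buildAuthorizeMessage` and `handleAuthorizeReply` are hypothetical names.

```javascript
// Builds the authorize message in the format shown in this step.
function buildAuthorizeMessage(accessToken) {
  return {
    destination: { type: 'service', id: 'edge' },
    payload: { eventName: 'authorize', eventData: { token: accessToken } },
  };
}

// Interprets a reply from the Signaling Service (assumed to be JSON text).
// Returns true when authorized, false for unrelated events, and throws
// when the service reports an error.
function handleAuthorizeReply(rawMessage) {
  const { payload } = JSON.parse(rawMessage);
  if (payload.eventName === 'authorized') {
    return true;
  }
  if (payload.eventName === 'error') {
    const reason = payload.eventData?.detail?.reason ?? 'unknown';
    const text = payload.eventData?.message ?? 'error';
    throw new Error(`Authorization failed: ${text} (${reason})`);
  }
  return false;
}

// Browser usage sketch (not executed here):
// const socket = new WebSocket(feed.webRtcUrl);
// socket.onopen = () => socket.send(JSON.stringify(buildAuthorizeMessage(accessToken)));
// socket.onmessage = (event) => handleAuthorizeReply(event.data);
```

Sending the authorize message from the open handler keeps the client well inside the 5 second window described above.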

3. Request ICE Servers

Since 2-Way Audio uses a peer-to-peer connection, negotiation between the peers will need to occur so they can find a way to connect. Signaling Services provides this information upon request, once a valid web sockets connection is established. Send the following message from the client. Note that we also send deviceId here - this is the same deviceId present in the feeds object.

{
  "destination": {
    "type": "service",
    "id": "talkdown",
    "routeHint": deviceId
  },
  "payload": {
    "eventName": "getIceServers"
  }
}

The response from Signaling Services will contain the ICE servers. An error may be returned instead, in which case the call should be treated as failed.

{
  "source": {
    "type": "service",
    "id": "talkdown"
  },
  "payload": {
    "eventName": "iceServers",
    "eventData": {
      "iceServers": [iceServers, ...],
      "ttl": 0
    }
  }
}

Instantiate your WebRTC library of choice with the iceServers portion of the response. An HTML DOM element is also bound for handling the audio output. For more information about this particular API, please refer to Mozilla's documentation. Enabling autoplay in the DOM element is recommended for full duplex audio: <audio autoplay />.

let peerConnection = new RTCPeerConnection({iceServers});

// Capture the local microphone; it is always needed to talk to the far end.
// (Requesting neither audio nor video makes getUserMedia reject.)
const stream = await navigator.mediaDevices.getUserMedia({
  audio: true,
  video: false,
});

// add event listeners for the webRTC connection
peerConnection.addEventListener('track', (event) => {
  if (event.track.kind === 'audio' && audioDom !== null) {
    [audioDom.srcObject] = event.streams;
  }
});

// Only receive far-end audio when there is an element to play it
if (audioDom !== null) {
  peerConnection.addTransceiver('audio', {direction: 'recvonly'});
}

// Send the local microphone track(s) to the far end
for (const track of stream.getTracks()) {
  peerConnection.addTrack(track);
}

The peerConnection object is now established.
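The request and response handling from this step can be sketched as follows. The function names are hypothetical, and the sketch assumes the reply has already been parsed from JSON. It fails the call when an error comes back, as recommended above.

```javascript
// Builds the getIceServers request for a device (format as shown in this step).
function buildGetIceServersMessage(deviceId) {
  return {
    destination: { type: 'service', id: 'talkdown', routeHint: deviceId },
    payload: { eventName: 'getIceServers' },
  };
}

// Turns a parsed iceServers reply into a configuration object that can be
// passed to `new RTCPeerConnection(config)`. Throws if the service returned
// an error or an unexpected event, so the caller can fail the call.
function toPeerConnectionConfig(message) {
  const { eventName, eventData } = message.payload;
  if (eventName === 'error') {
    throw new Error(`getIceServers failed: ${eventData?.detail?.reason ?? 'unknown'}`);
  }
  if (eventName !== 'iceServers' || !Array.isArray(eventData?.iceServers)) {
    throw new Error(`Unexpected reply: ${eventName}`);
  }
  return { iceServers: eventData.iceServers };
}
```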

4. Prepare Listeners

Before starting a call, it will be important to add listeners to handle specific events during the call. Notably, we'll need to handle connection state changes and ICE candidate messages.

Connection State Changes

It is recommended that the call is terminated if the connection state indicates it is closed or failed. In JavaScript, the listener is placed on the peerConnection we established earlier.

peerConnection.onconnectionstatechange = () => {
  const state = peerConnection.connectionState;
  if (state === 'failed') {
    throw new Error('WebRTC connection failed');
  }
  if (state === 'closed') {
    // Stop the call
  }
};

ICE Candidate Messages

Throughout the call, ICE candidate messages will be received from and sent to Signaling Services. The format of the iceCandidates message is the same for both receiving and sending. Note that we also send deviceId here; this is the same deviceId that was present in the feeds object.

{
  destination: {
    type: 'service',
    id: 'talkdown',
    routeHint: deviceId,
  },
  payload: {
    eventName: "iceCandidates",
    eventData: {
      iceCandidates: candidates,
    },
  },
}

When receiving ICE candidates, it is critical that they are applied to the peerConnection object. In JavaScript, this is done by instantiating a new RTCIceCandidate and then adding it to peerConnection.

let handler = (eventData) => {
  for (const ice of eventData.iceCandidates) {
    const candidate = new RTCIceCandidate(ice);
    peerConnection.addIceCandidate(candidate);
  }
};

When the peerConnection object emits the icecandidate event, we'll need to send a message to Signaling Services. Don't forget to include the deviceId.

peerConnection.onicecandidate = async (event) => {
  if (event.candidate === null) {
    return;
  }
  await signalingServiceWebSocket.send({
    destination: {
      type: 'service',
      id: 'talkdown',
      routeHint: deviceId,
    },
    payload: {
      eventName: "iceCandidates",
      eventData: {
        iceCandidates: [event.candidate],
      },
    },
  });
};

Session Created

There is one more listener that will be required to establish the call, the sessionCreated event, but since it is closely tied with sending the SDP offer, the details are included below.
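One way to organize these listeners is a small router that dispatches incoming Signaling Services messages by payload.eventName. The sketch below is illustrative only: `SignalingRouter` is a hypothetical class, and it assumes each Web Socket message is a JSON text frame.

```javascript
// Hypothetical router (not part of any SDK): maps payload.eventName values
// to handler functions. One handler per event name keeps the sketch small.
class SignalingRouter {
  constructor() {
    this.handlers = new Map();
  }

  // Registers (or replaces) the handler for one event name.
  on(eventName, handler) {
    this.handlers.set(eventName, handler);
  }

  // Resolves with eventData the next time the named event arrives, then
  // removes itself. It replaces any handler already registered for that name.
  once(eventName) {
    return new Promise((resolve) => {
      this.on(eventName, (eventData) => {
        this.handlers.delete(eventName);
        resolve(eventData);
      });
    });
  }

  // Call from the Web Socket message listener with the raw text frame.
  // Returns false when no handler is registered for the event.
  dispatch(rawMessage) {
    const { payload } = JSON.parse(rawMessage);
    const handler = this.handlers.get(payload.eventName);
    if (!handler) {
      return false;
    }
    handler(payload.eventData);
    return true;
  }
}
```

For example, `router.on('iceCandidates', handler)` could wire up the ICE candidate handler above, and calling `router.once('sessionCreated')` before sending the createSession message is one way to make sure that listener is in place before the reply arrives.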

5. Creating and Sending SDP Offer

Once the event handlers above are attached to the peerConnection, it's possible to send an SDP offer and initiate the actual 2-Way Audio call.

First create the offer and set it to the peerConnection:

const offer = await peerConnection.createOffer();
await peerConnection.setLocalDescription(offer);

Next, send it to Signaling Services. Use deviceId and mediaType from the feeds object here, as well as the localDescription from the WebRTC peerConnection object.

await signalingServiceWebSocket.send({
  destination: {
    type: 'service',
    id: 'talkdown',
    routeHint: deviceId,
  },
  payload: {
    eventName: "createSession",
    eventData: {
      deviceId: deviceId,
      sessionDescription: peerConnection.localDescription,
      mediaType: mediaType
    },
  },
});

Lastly, handle the sessionCreated event from Signaling Services.

{
  "source": {
    "type": "service",
    "id": "talkdown"
  },
  "payload": {
    "eventName": "sessionCreated",
    "eventData": {
      "sessionDescription": sessionDescription
    }
  }
}

When this event is received, the call is functioning as soon as the eventData.sessionDescription value is assigned to the WebRTC peerConnection object. The event listener should be registered before sending the createSession message to prevent any chance of a race condition.

let handle = async (eventData) => {
  await peerConnection.setRemoteDescription(eventData.sessionDescription);
  // Connected!
};

6. Closing the Call

To end the call, either because the user wants to or because of an error, call close() on the WebRTC peerConnection if it is not null, and then disconnect from Signaling Services.

peerConnection.close();

signalingServiceWebSocket.close();

Note: The maximum length of an audio session is 10 minutes. After 10 minutes, the session is disconnected with an error.
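A close routine that is safe to call more than once can be sketched as below. The helper names are hypothetical, and closing shortly before the 10 minute limit is a design choice assumed here for illustration, not a documented requirement.

```javascript
const MAX_SESSION_MS = 10 * 60 * 1000; // the 10 minute session limit noted above

// Hypothetical helper: returns a closeCall function that closes the WebRTC
// connection (if not null) and then the Signaling Services socket, once only.
function createCallCloser(peerConnection, signalingServiceWebSocket) {
  let closed = false;
  return function closeCall() {
    if (closed) {
      return false; // already closed; nothing to do
    }
    closed = true;
    if (peerConnection !== null) {
      peerConnection.close();
    }
    if (signalingServiceWebSocket !== null) {
      signalingServiceWebSocket.close();
    }
    return true;
  };
}

// Schedules closeCall shortly before the session limit so the client ends
// the call itself. Returns the timer so it can be cleared with clearTimeout
// when the call ends earlier.
function scheduleAutoClose(closeCall, marginMs = 5000) {
  return setTimeout(closeCall, MAX_SESSION_MS - marginMs);
}
```

Because `closeCall` ignores repeated calls, the same function can be shared by the user's hang-up button, the error handlers, and the timer.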

7. Handling Errors and Disconnects

All errors share a common format. You can trust that when an error occurs, the payload.eventName property of the web sockets message will always be error. It is advisable to disconnect and reset the client whenever an error occurs as this is the expected behavior from the service standpoint.

If your client receives a message from Signaling Services where the payload.eventName is disconnect, close and reset the WebRTC and Web Sockets connections before attempting another call. Additionally, if the WebRTC connection closes for any reason, close and clean up the call to bring it back to its initial state (i.e., destroy objects and listeners and then reinstantiate them).
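The rule above can be sketched as a single check applied to every parsed message. `handleServiceMessage` and the `resetCall` callback are hypothetical names; `resetCall` stands for whatever routine closes and reinitializes the client.

```javascript
// Hypothetical helper: invokes resetCall when a parsed Signaling Services
// message is an "error" or "disconnect" event. Returns true if a reset was
// triggered, false for any other event.
function handleServiceMessage(message, resetCall) {
  const eventName = message?.payload?.eventName;
  if (eventName === 'error' || eventName === 'disconnect') {
    resetCall(eventName, message.payload.eventData);
    return true;
  }
  return false;
}
```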

An example of an authorization failure:

{
  "source": {
    "type": "service",
    "id": "edge"
  },
  "payload": {
    "eventName": "error",
    "eventData": {
      "status": "unauthorized",
      "context": "authorization",
      "message": "Token not valid",
      "detail": {
        "reason": "tokenIsNotValid"
      }
    }
  }
}

The eventData.detail.reason value gives more information about why an error occurred. Possible reasons include:

- busy

- invalidRequest

- serviceUnavailable

- authorizationFailed

- permissionDenied

- resourceNotFound

Generally speaking, it's best to disconnect and reset the client when an error occurs.

Busy State

In 2-Way Audio, it's possible to receive an error when making a call because the remote end is "busy". In this case, you can tell your end user that the speaker is busy by checking the reason in the response data.
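Reading the reason and detecting the busy state might look like the sketch below. `describeError` is a hypothetical helper, and the userMessage strings are placeholders rather than text defined by the service. Reasons outside the list in this guide are handled as well, since the authorization example uses tokenIsNotValid.

```javascript
// Reasons listed in this guide for eventData.detail.reason.
const LISTED_REASONS = new Set([
  'busy',
  'invalidRequest',
  'serviceUnavailable',
  'authorizationFailed',
  'permissionDenied',
  'resourceNotFound',
]);

// Hypothetical helper: turns a parsed error message into something a UI can
// use. Missing fields fall back to the reason "unknown".
function describeError(message) {
  const eventData = message?.payload?.eventData ?? {};
  const reason = eventData.detail?.reason ?? 'unknown';
  const busy = reason === 'busy';
  const userMessage = busy
    ? 'The speaker is busy. Please try again later.'
    : `The call could not be completed (${reason}).`;
  return { reason, busy, listed: LISTED_REASONS.has(reason), userMessage };
}
```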

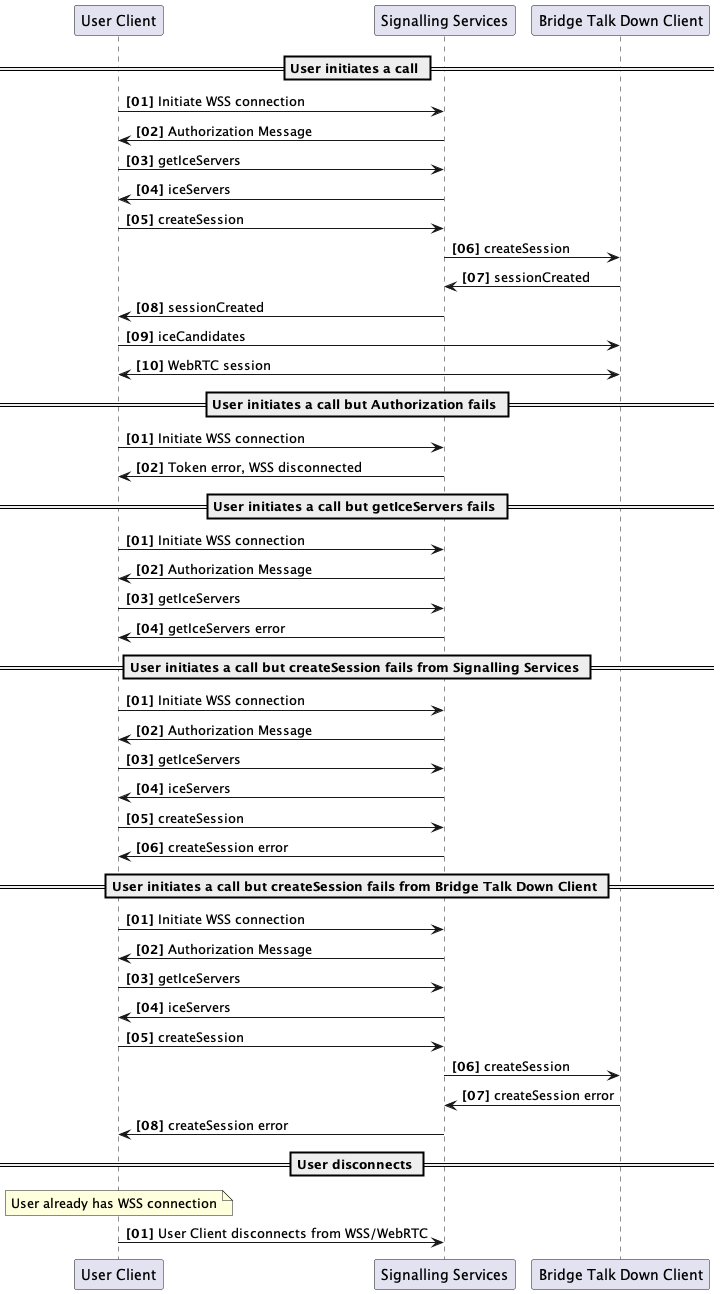

Sequence Diagram
